Exploring Techniques for Tuning Large Language Models (LLMs)

As artificial intelligence advances rapidly, using large language models (LLMs) efficiently and effectively has become increasingly important. However, there are many different ways to use LLMs, which can be daunting for beginners.

Broadly, pretrained LLMs can be applied to new tasks in two main ways: in-context learning and fine-tuning. This article gives a brief overview of in-context learning, then discusses the methods available for fine-tuning LLMs and the steps to create a dataset for fine-tuning an LLM for question answering.

In-context learning

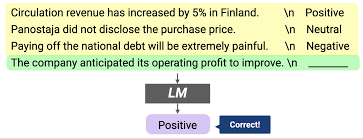

Large language models (LLMs) trained on a general text corpus can learn in context. This means a pretrained LLM does not need to be further trained or fine-tuned to perform specific or new tasks that it was not explicitly trained on. Instead, given a few examples of the target task in the input prompt, the LLM can pick up the task directly, as shown in the figure above.

If we are using the model through an API, in-context learning can be particularly beneficial since we may not have direct access to the model.
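As a minimal sketch of what "providing a few examples via the input prompt" can look like, the snippet below assembles a few-shot prompt as plain text. The function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sentiment examples are illustrative assumptions, not a format required by any particular model or API.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, solved examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final block is left open so the model completes the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    ("The movie was wonderful.", "positive"),
    ("I want my money back.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    examples,
    "The service was slow and rude.",
)
print(prompt)
```

The resulting string would be sent to the model as-is; no model weights are changed at any point.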

Hard/Discrete prompt tuning

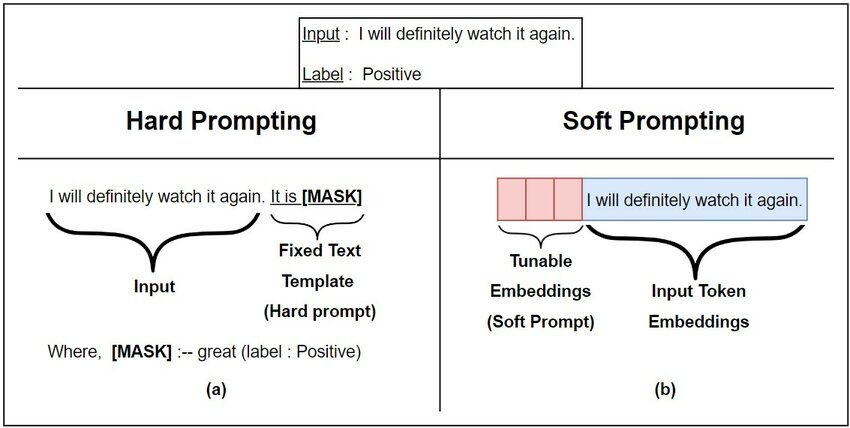

Prompting refers to a method of incorporating additional information into a model’s output generation process. This is typically done by adding a set of text tokens to the input text, either before or after the original text, in order to transform the task into a masked language modeling problem. This process of modifying the input text with a prefix or suffix text template is known as hard prompting. The purpose of hard prompting is to provide the model with more context and guidance for generating the desired output, whether it be for classification or generation tasks.

For example, you can modify the prompt from

What is 15^2 / 5?

to

Calculate the value of 15^2 / 5

Prompt tuning of this kind is more cost-effective than fine-tuning the model’s parameters. However, it is usually less effective than fine-tuning, since it does not update the model’s parameters for the given task, which may limit how well the model adapts to the task’s unique characteristics. Prompt tuning can also require significant effort, as it frequently involves people assessing and comparing the quality of different prompts.
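The idea of wrapping the input in a prefix or suffix template can be sketched in a few lines. The templates and the helper name `apply_template` below are hypothetical; in practice a person (or an evaluation script) would compare the model’s outputs for each candidate and keep the best one.

```python
def apply_template(template, text):
    """Wrap the raw input in a fixed prefix/suffix template (a hard prompt)."""
    return template.format(text=text)


# Hypothetical candidate templates that would be compared by hand.
candidate_templates = [
    "{text}",                                  # no template (baseline)
    "Calculate the value of {text}",           # prefix template
    "{text}\nAnswer with a single number:",    # suffix template
]

raw_input = "15^2 / 5"
for template in candidate_templates:
    print(repr(apply_template(template, raw_input)))
```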

Soft prompt tuning

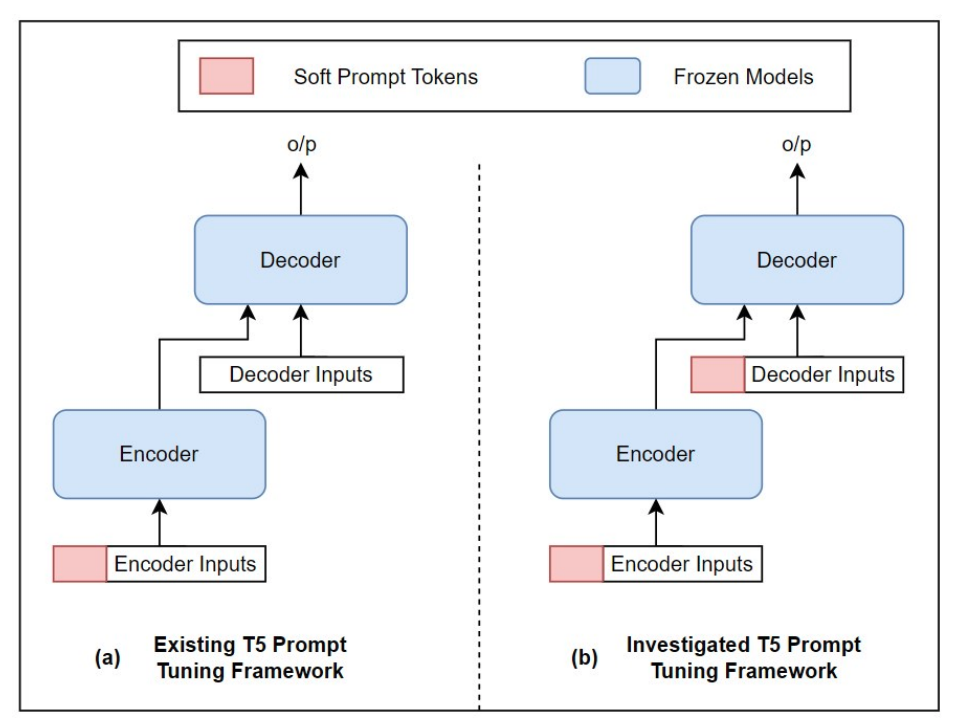

Soft prompting adds trainable embedding vectors to the model’s primary input and optimizes those embeddings while the model’s own weights stay unchanged. This method generally gives better results than hard prompting, and it can be comparable to full model fine-tuning for natural language processing (NLP) tasks such as paraphrase detection and question answering.

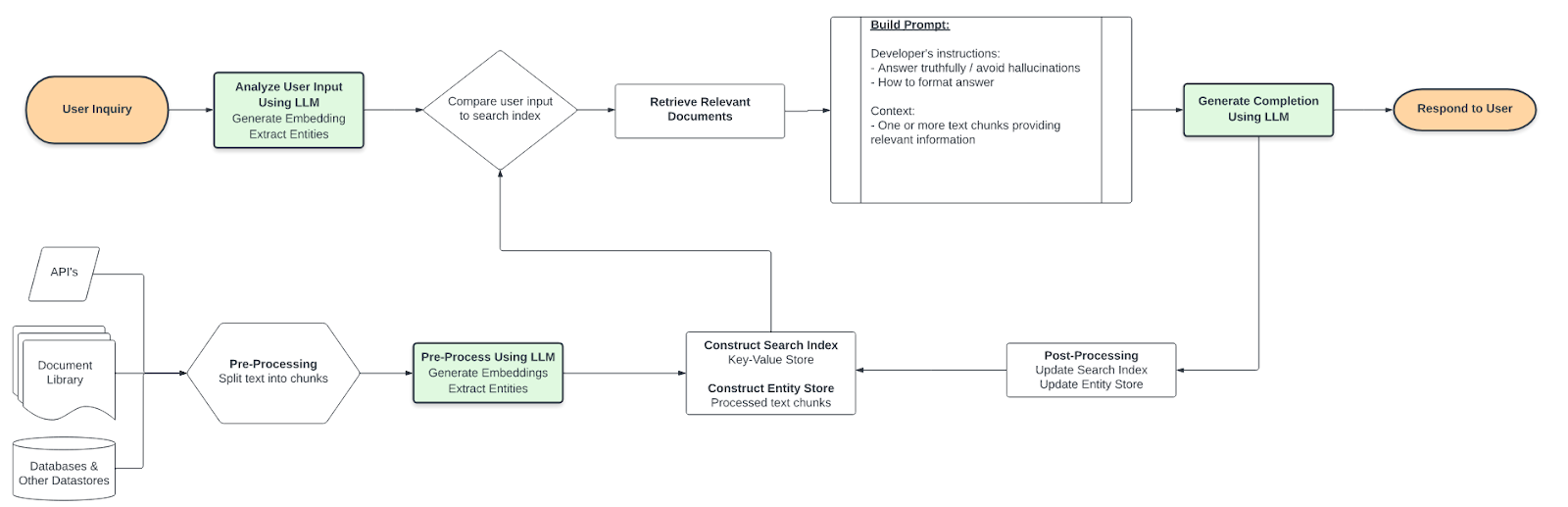
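To make the mechanics concrete, here is a toy sketch in plain Python. It assumes a deliberately tiny "model" (a frozen embedding table plus a frozen linear readout over the mean-pooled sequence), which is an invention for illustration only; real soft prompt tuning backpropagates through a frozen transformer using a deep learning framework. What the sketch does show is the core idea: the prompt vectors are prepended to the input embeddings, and they are the only values that get updated.

```python
import random

random.seed(0)
DIM, N_PROMPT, VOCAB = 4, 2, 10

# Frozen stand-ins for a pretrained model (toy assumption): an embedding
# table and a linear readout applied to the mean-pooled sequence.
token_embeddings = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(VOCAB)]
readout = [random.gauss(0, 1) for _ in range(DIM)]

# The only trainable parameters: the soft prompt vectors.
soft_prompt = [[0.0] * DIM for _ in range(N_PROMPT)]


def forward(prompt, token_ids):
    """Prepend the soft prompt to the embedded tokens, mean-pool, and project."""
    sequence = prompt + [token_embeddings[i] for i in token_ids]
    pooled = [sum(row[d] for row in sequence) / len(sequence) for d in range(DIM)]
    return sum(p * w for p, w in zip(pooled, readout)), len(sequence)


def train_step(prompt, token_ids, target, lr=0.1):
    """One gradient step on squared error that updates the prompt only."""
    prediction, seq_len = forward(prompt, token_ids)
    error = prediction - target
    # d(loss)/d(prompt[i][d]) = 2 * error * readout[d] / seq_len for every row i.
    new_prompt = [
        [value - lr * 2.0 * error * readout[d] / seq_len for d, value in enumerate(row)]
        for row in prompt
    ]
    return new_prompt, error ** 2


token_ids, target = [1, 5, 7], 1.0
frozen_copy = [row[:] for row in token_embeddings]
losses = []
for _ in range(100):
    soft_prompt, loss = train_step(soft_prompt, token_ids, target)
    losses.append(loss)

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

The loss shrinks across steps even though `token_embeddings` and `readout` are never modified, which is the property that makes soft prompts cheap to train and store per task.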

Indexing for IR (Converting into Embeddings)

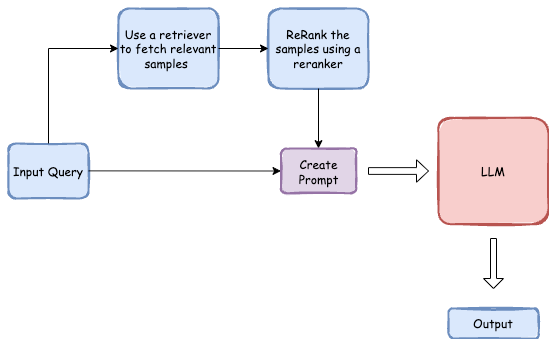

In the domain of large language models, indexing can be considered a way to use in-context learning to transform LLMs into retrieval systems that can extract information from external sources and websites. This involves using an indexing module to divide a document or website into smaller sections, converting them into vectors that can be stored in a vector database. When a user inputs a query, the indexing module computes the vector similarity between the query and each vector in the database, and returns the top k most similar vectors as the response.

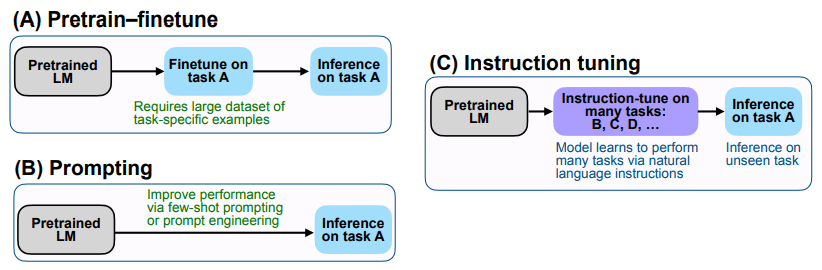
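The chunk, embed, store, and search flow described above can be sketched with the standard library alone. The embedding here is a simple word-count vector, used purely as a stand-in for a learned embedding model, and the in-memory list stands in for a vector database; `chunk_text`, `toy_embed`, and `top_k` are hypothetical names chosen for this example.

```python
import math
import re
from collections import Counter


def chunk_text(text, words_per_chunk=6):
    """Split a document into consecutive chunks of a fixed number of words."""
    words = text.split()
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


def toy_embed(text):
    """Stand-in embedding: a sparse word-count vector (not a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query, index, k=2):
    """Return the k chunks whose vectors are most similar to the query vector."""
    query_vector = toy_embed(query)
    scored = [(cosine_similarity(query_vector, vec), chunk) for chunk, vec in index]
    return sorted(scored, reverse=True)[:k]


document = (
    "Cats purr when they are happy. "
    "Vector databases store embeddings for search. "
    "Paris is the capital of France."
)
chunks = chunk_text(document)
index = [(chunk, toy_embed(chunk)) for chunk in chunks]  # the "vector database"
results = top_k("Which system can store embeddings?", index, k=1)
print(results)
```

In a retrieval setup, the returned chunks would then be placed into the LLM’s prompt as context, which is how indexing connects back to in-context learning.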

Fine-tuning

Fine-tuning can take three forms:

Feature-based fine-tuning

The feature-based approach involves utilizing a pre-trained LLM on the target dataset to generate output embeddings for the training set. These embeddings are used as input features to train a classification model. Although this approach is commonly used for embedding-focused models like BERT, it can also be applied to extract embeddings from generative GPT-style models.
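A minimal sketch of the two steps follows: embed the training set once with a frozen model, then train a small classifier on those embeddings. The `frozen_embed` function is a hand-written stand-in that returns a two-dimensional feature vector; a real pipeline would call the pretrained LLM at that point. The tiny sentiment dataset and all function names are assumptions made for this example.

```python
import math


def frozen_embed(text):
    """Stand-in for a frozen pretrained encoder; returns a fixed 2-d feature vector.

    A real pipeline would call the LLM here and keep its weights unchanged.
    """
    words = text.lower().split()
    return [
        sum(w in {"good", "great", "love"} for w in words),
        sum(w in {"bad", "awful", "hate"} for w in words),
    ]


def train_logistic_regression(features, labels, lr=0.5, epochs=200):
    """Fit weights and a bias on precomputed features with per-example gradient steps."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            z = sum(w * v for w, v in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            weights = [w - lr * (p - y) * v for w, v in zip(weights, x)]
            bias -= lr * (p - y)
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the linear score is positive, else 0."""
    return int(sum(w * v for w, v in zip(weights, x)) + bias > 0)


texts = ["great film love it", "awful plot bad acting", "good fun", "hate it"]
labels = [1, 0, 1, 0]

# Step 1: embed the training set once with the frozen model.
features = [frozen_embed(t) for t in texts]
# Step 2: train a small classifier on those embeddings.
weights, bias = train_logistic_regression(features, labels)
print(predict(weights, bias, frozen_embed("a great good story")))
```

Because the embeddings are computed once and reused, the expensive model is never run during classifier training.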

Fine-tuning to update the output layers

In this method, we maintain the parameters of the pre-trained LLM as they are and only train the newly added output layers, similar to how we train a small multilayer perceptron or a logistic regression classifier on the embedded features.

Fine-tuning to update all layers

Updating all the layers of the model generally gives the best results but is the most computationally expensive form of fine-tuning. The parameters of the pretrained LLM are not frozen but fine-tuned, so this approach can be computationally prohibitive for large models.
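One way to see the cost difference between the three approaches is to count how many of the LLM’s parameters receive gradient updates under each. The layer names and sizes below are made up for a toy model and are not measurements of any real LLM.

```python
# Hypothetical layer sizes for a toy model; real LLMs have billions of parameters.
layers = {
    "embedding": 1_000_000,
    "transformer_blocks": 10_000_000,
    "output_head": 2_000,
}


def trainable_layers(strategy):
    """Return the names of the LLM layers that receive gradient updates."""
    if strategy == "feature_based":
        return []  # the LLM only produces embeddings; a separate classifier is trained
    if strategy == "output_layers":
        return ["output_head"]
    if strategy == "all_layers":
        return list(layers)
    raise ValueError(f"unknown strategy: {strategy}")


for strategy in ("feature_based", "output_layers", "all_layers"):
    count = sum(layers[name] for name in trainable_layers(strategy))
    print(f"{strategy}: {count:,} trainable LLM parameters")
```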

Comparison of different tuning tasks

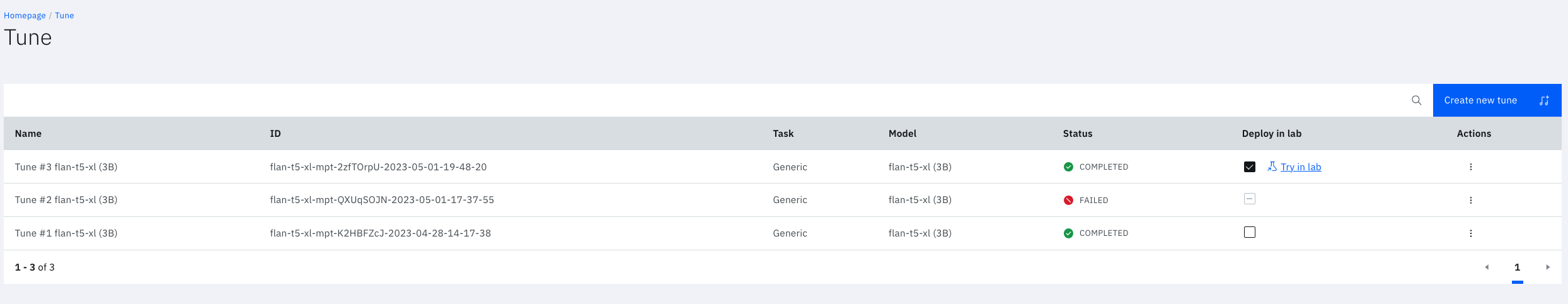

Use case: fine-tuning an LLM for question answering

Let’s consider the use case of building a question answering system using an LLM. To fine-tune a base generative model such as Llama or FLAN-T5, you first need to create a dataset of questions, contexts, and answers. The steps for creating such a dataset are as follows:

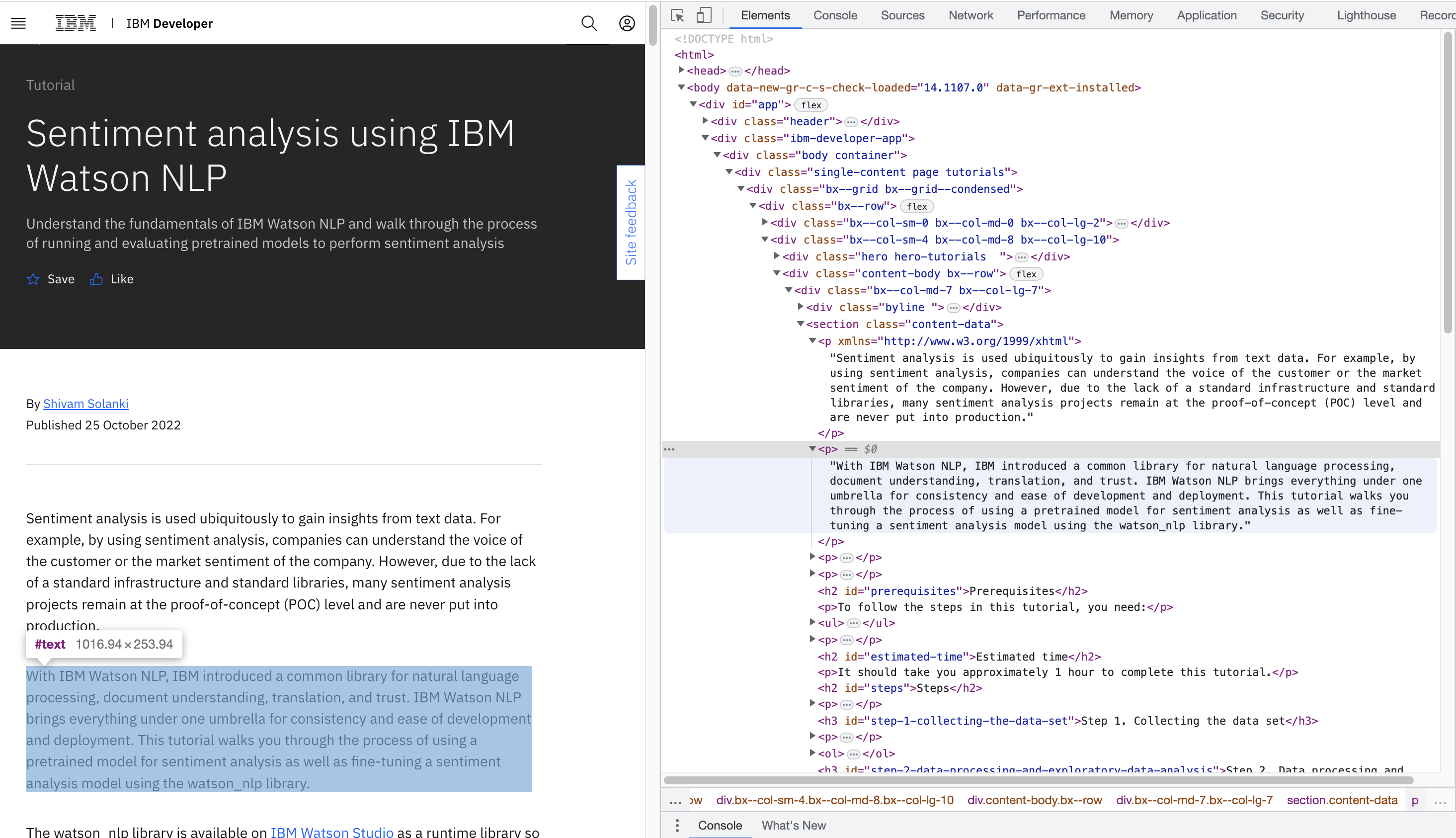

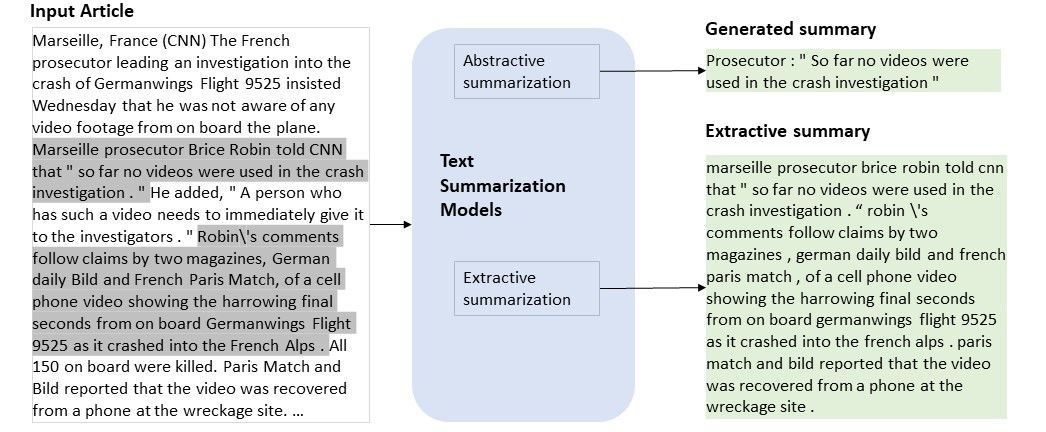

Step 1: Summarization

Use summarization to create a summary of each document or passage.

Summarize each passage using FLAN-UL2 (BAM endpoint). Flan-UL2 is an encoder-decoder model that follows the T5 architecture. To improve its performance, it was fine-tuned using the “Flan” prompt tuning and dataset collection.

Step 2: Question Generation

Use the passage (context) and the answer (summary) to create questions with the t5-small-question-generator model. The model is designed to generate questions from a given context and answer, following a sequence-to-sequence approach: it takes the answer and context as input and produces the corresponding question as output.

Step 3: Final questions, contexts, and answers

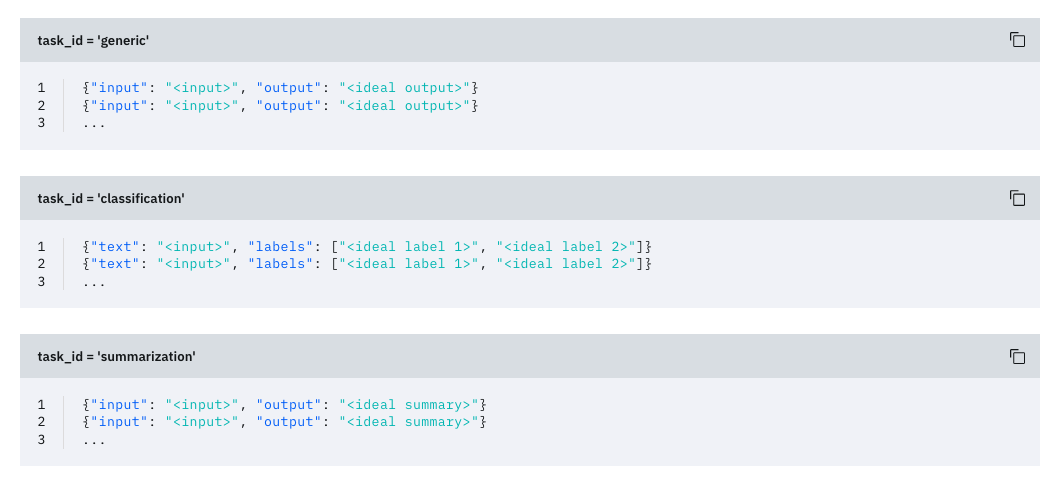

Convert the data (question, context, and answer) into the format required for fine-tuning the BAM model.

These data points (question, context, and answer) can now be used to fine-tune any large language model so that it can be used specifically for knowledge retrieval and question answering on a specific domain, corpus, or dataset.
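As a rough sketch of the conversion step, the snippet below turns (question, context, answer) triples into JSON Lines records. The exact format BAM expects is not specified here, so the `input`/`output` field names and the prompt wording are assumptions that would need to be adapted to the target fine-tuning service.

```python
import json


def to_training_record(question, context, answer):
    """Format one (question, context, answer) triple as a prompt/target pair."""
    prompt = (
        "Answer the question using the context.\n\n"
        f"Context: {context}\n\n"
        f"Question: {question}\n\n"
        "Answer:"
    )
    # Field names are hypothetical; rename them to match the target service.
    return {"input": prompt, "output": answer}


def to_jsonl(triples):
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(to_training_record(*t)) for t in triples)


triples = [
    (
        "What architecture does Flan-UL2 follow?",
        "Flan-UL2 is an encoder-decoder model that follows the T5 architecture.",
        "It follows the T5 encoder-decoder architecture.",
    ),
]
jsonl = to_jsonl(triples)
print(jsonl)
```

Keeping one record per line makes the file easy to stream, shuffle, and split into training and validation sets.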

Stay tuned for the next blog post on building a knowledge retrieval system using an LLM.